If you could predict your death, would you want to? For most of human history, the answer has been a qualified yes. In Neolithic China, seers practiced pyro-osteomancy, or the reading of bones; ancient Greeks divined the future by the flight of birds; Mesopotamians even attempted to plot the future in the attenuated entrails of dead animals. We’ve looked to the stars and the movement of planets, we’ve looked to weather patterns, and we’ve even looked to bodily signs, like the superstition that a child born with a caul is assured good fortune and long life. By the 1700s, the art of prediction had grown slightly more scientific, with mathematician and probability expert Abraham de Moivre attempting to calculate his own death by equation, but truly accurate predictions remained out of reach.

Then, in June 2021, de Moivre’s fondest wish seemed to come true: scientists reported the first reliable measurement for determining the length of your life. Using measurements of about 5,000 proteins in blood samples from roughly 23,000 Icelanders, researchers at deCODE Genetics in Reykjavik, Iceland, developed a predictor of the time of death (or, as their press release puts it, “how much is left of the life of a person”). It’s an unusual claim, and it raises pointed questions about method, ethics, and what we mean by life.

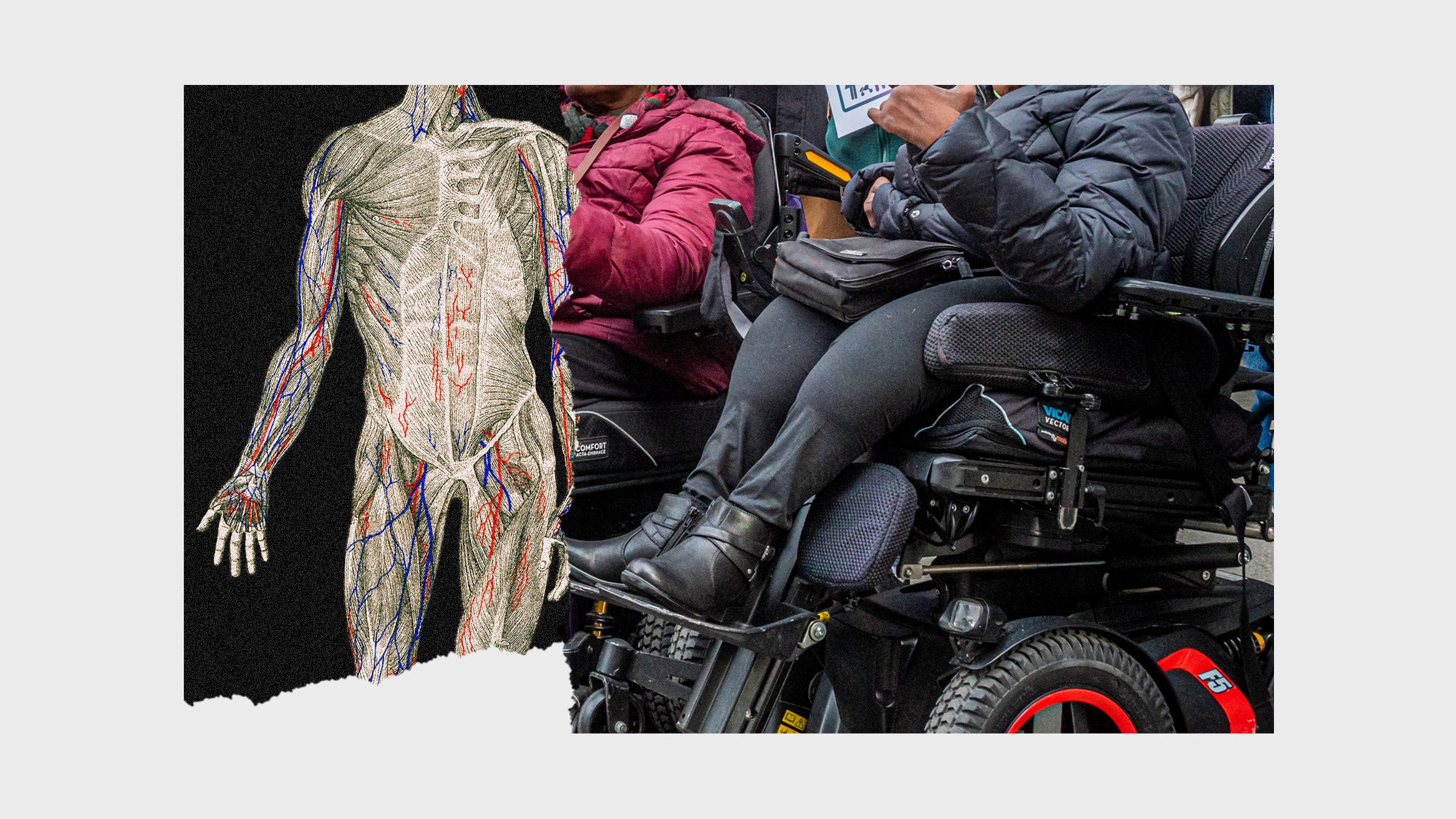

A technology for accurately predicting death promises to upend the way we think about our mortality. For most people, most of the time, death remains a vague consideration, haunting the shadowy recesses of our minds. But knowing when our life ends, understanding how many days and hours are left, removes that comfortable shield of abstraction. It also makes us see risk differently; we are, for instance, more likely to try unproven therapies in an attempt to beat the odds. If the prediction came far enough in advance, most of us might even try to prevent the outcome altogether. Science fiction often tantalizes us with that possibility; movies like Minority Report, Thrill Seekers, and the Terminator franchise use advance knowledge of the future to rewrite it, averting death and catastrophe (or failing to) before they happen. Indeed, when healthy and abled people think about predicting death, they tend to think of these sci-fi possibilities: futures where death and disease are eradicated before they can begin. But for disabled people like myself, the technology of death prediction serves as a reminder that we’re already often treated as better off dead. A science for predicting the length of life carries with it a judgment of its value: that more life equates to better or more worthwhile life. It’s hard not to see the juggernaut of a technocratic authority bearing down on the most vulnerable.

This summer’s discovery was the work of researchers Kari Stefansson and Thjodbjorg Eiriksdottir, who found that individual proteins in our blood relate to overall mortality, and that different causes of death share similar “protein profiles.” Eiriksdottir claims that they can measure these profiles in a single blood draw, seeing in the plasma a sort of hourglass for the time left. The scientists call these mortality-tracking indicators biomarkers, and they point to 106 of them that help predict all-cause (rather than illness-specific) mortality. But the breakthrough for Stefansson, Eiriksdottir, and their research team is scale. The technique they relied on, a SOMAmer-based multiplex proteomic assay, lets the group measure thousands of proteins at once.

The result of all these measurements isn’t an exact date and time. Instead, it gives medical professionals the ability to accurately pick out the patients most likely to die (the highest-risk group, about 5 percent of the total) and those least likely to die (the lowest-risk group), from nothing more than a prick of the needle and a small vial of blood. That might not seem like much of a crystal ball, but it’s clearly meant as a jumping-off point. The deCODE researchers plan to improve the process to make it more “useful,” and this effort joins other projects racing to be first in death-prediction tech, including an artificial intelligence algorithm for palliative care. The creators of this algorithm hope to use “AI’s cold calculus” to nudge clinicians’ decisions and to force loved ones to have the dreaded conversation, because there’s a world of difference between “I am dying” and “I am dying now.”

In their press release, the deCODE researchers praise the ability of biomarkers to make predictions about large swaths of the population. “Using just one blood sample per person,” says Stefansson of the clinical trials, “you can easily compare large groups in a standardized way.” But standardization sits poorly with the deeply varied needs of individual patients. What happens when a technology like this, supplemented by AI algorithms, leaves the research lab and enters use in real-world situations? In the wake of the Covid-19 pandemic, we have an answer. The pandemic marked the first time death-predictive data was put to work at such a large scale, and it revealed the deeply disturbing limits of “cold calculus.”